Want to know everything about the data integration framework? Keep reading. This article covers the whole process in detail and explains how it helps companies analyze their data.

Three… two… one…

Lift Off!

Last month, SpaceX launched more of its Starlink satellites, a constellation of around 300 small satellites that will circle the Earth in low Earth orbit. The purpose of these satellites is to provide internet access to everyone at a much lower cost and a much higher speed.

These satellites are a scientific wonder: thanks to them, stargazers can now spot trails of satellites crossing the night sky almost every other day. There is even an app that tells you the right time to spot the Starlink satellites in orbit.

This blog, however, is not about them. We are not going to discuss stars, Starlink, or satellites. Instead, we are going to discuss connectivity and why data connectivity matters so much in this modern age.

Do you know how many data points are gathered just to make sure that every satellite lifts off according to plan? Billions of them!

These inputs come from a variety of sources: the rocket engines and propellant systems, the onboard computers, the condition of the rocket's structure, the cooling systems that reduce heat in the area, and the sensors installed around the launch tower to monitor every aspect of the rocket.

The amount of data produced is ENORMOUS! Handling it is a feat in itself.

Evaluating and monitoring all this data is not an easy job. Most of it starts out as analog signals that must be converted into digital data points using an analog-to-digital converter (ADC). The data is then stored in databases and streamed to the monitors in the control room. A slight delay in data connectivity, and the whole mission can fail. This is where data integration software helps!

Data integration software allows robust transfer of data from the launch tower to the control room within a split second.
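To make the ADC conversion step mentioned above a little more concrete, here is a minimal sketch of what an ideal converter does: it maps a continuous voltage onto one of 2^n integer codes. The 12-bit resolution, the 0 to 5 V input range, and the sample readings are assumptions chosen for illustration, not figures from any real launch system.

```python
def adc_sample(voltage: float, v_ref: float = 5.0, bits: int = 12) -> int:
    """Quantize an analog voltage into an n-bit digital code (ideal ADC).

    Assumed parameters: a 0..v_ref input range and `bits` of resolution.
    """
    levels = 2 ** bits                        # e.g. 4096 codes for 12 bits
    clamped = min(max(voltage, 0.0), v_ref)   # out-of-range inputs saturate
    code = int(clamped / v_ref * levels)      # truncate to the lower code
    return min(code, levels - 1)              # full scale maps to the top code


def code_to_voltage(code: int, v_ref: float = 5.0, bits: int = 12) -> float:
    """Approximate voltage represented by a digital code."""
    return code * v_ref / 2 ** bits


# Hypothetical sensor readings in volts.
readings = [0.0, 1.25, 2.5, 4.99, 5.2]
codes = [adc_sample(v) for v in readings]
print(codes)  # [0, 1024, 2048, 4087, 4095]
```

With these assumed settings, each code step covers 5 / 4096 V, or roughly 1.2 mV; adding bits makes the steps finer.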

Data integration is such a crucial part of the aerospace manufacturing industry that it has its own set of processes. Let's look at the complete data integration framework that collects data and delivers insights across this industry.

Key Takeaways: Data Integration Framework

- Why is data integration important for your business?

- What is the data integration framework and how can it help?

- How do data integration tools improve integration processes?

- When should you buy a data integration tool?

Data Integration Framework Explained

Organizations use data all the time, and it is everywhere around us: the phones we use, the content we watch, the things we do online. From working in project management tools to streaming YouTube, everything is tracked and recorded as data points. This data is vast, varied, voluminous, and fast-moving.

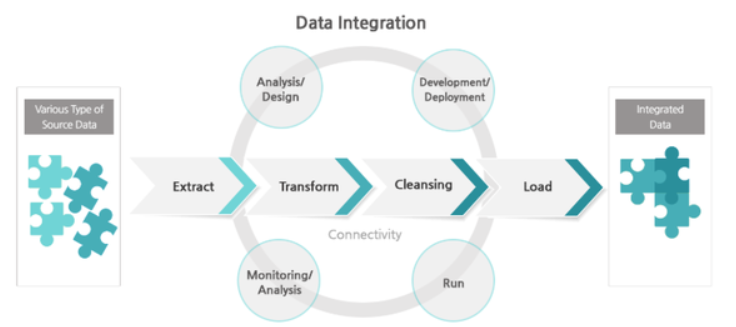

Since analyzing this data can reveal crucial insights for businesses, data integration follows a framework to extract, cleanse, store, and visualize it.

Here is the complete data integration framework in detail:

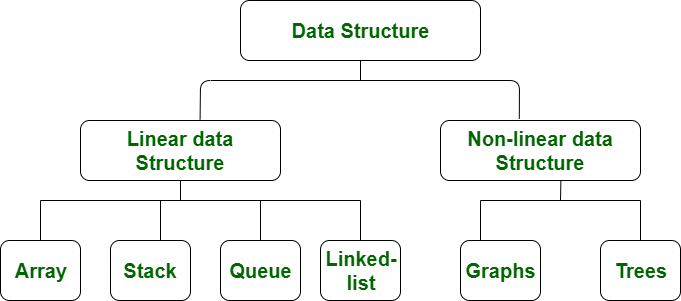

What are Data Structures?

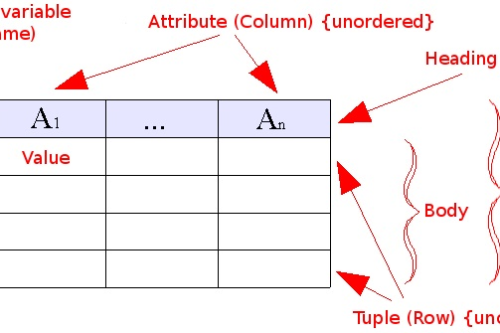

Almost all data available in organizations follows a linear data structure pattern, in which elements are arranged in sequence. This data is fetched from applications and then stored on storage devices in structures such as tables or stacks.

Source: GeeksforGeeks
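As a rough illustration of the linear structures mentioned above, the sketch below uses Python built-ins: a list of rows standing in for a table, a list used as a stack (last in, first out), and a `collections.deque` used as a queue (first in, first out). The records are made up for the example.

```python
from collections import deque

# A "table": an ordered sequence of rows, each row a tuple of column values.
# These records are hypothetical.
table = [
    (1, "Alice", "3444444"),
    (2, "Bob", "3555555"),
    (3, "Carol", "3444444"),
]

# A stack: items are pushed and popped at the same end (LIFO).
stack = []
for row in table:
    stack.append(row)
last_in = stack.pop()        # the most recently pushed row (Carol)

# A queue: items enter at one end and leave at the other (FIFO).
queue = deque(table)
first_in = queue.popleft()   # the first row inserted (Alice)

print(last_in[1], first_in[1])  # Carol Alice
```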

For example, in MySQL, data is stored in tables that are linked to other tables through foreign keys. Each record can be fetched using SQL statements such as:

SELECT * FROM table_name WHERE name != 'Bob' AND phone_number = '3444444' ORDER BY name;
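Here's a small, runnable sketch of the same idea using Python's built-in `sqlite3` module instead of MySQL, so it needs no database server. Two hypothetical tables, `customers` and `orders`, are linked by a foreign key, and a query of the same shape as the one above runs against `customers`. Table names, columns, and rows are invented for illustration.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.execute("PRAGMA foreign_keys = ON")  # SQLite enforces FKs only when enabled

# Hypothetical schema: orders.customer_id references customers.id.
conn.executescript("""
CREATE TABLE customers (
    id           INTEGER PRIMARY KEY,
    name         TEXT NOT NULL,
    phone_number TEXT
);
CREATE TABLE orders (
    id          INTEGER PRIMARY KEY,
    customer_id INTEGER NOT NULL REFERENCES customers(id),  -- foreign key
    amount      REAL
);
""")

conn.executemany("INSERT INTO customers VALUES (?, ?, ?)", [
    (1, "Alice", "3444444"),
    (2, "Bob", "3444444"),
    (3, "Carol", "3555555"),
])
conn.executemany("INSERT INTO orders VALUES (?, ?, ?)", [
    (10, 1, 99.5),
    (11, 3, 20.0),
])

# Filter rows like the query in the text, using bound parameters.
rows = conn.execute(
    "SELECT * FROM customers WHERE name != ? AND phone_number = ? ORDER BY name",
    ("Bob", "3444444"),
).fetchall()
print(rows)  # [(1, 'Alice', '3444444')]

# Follow the foreign key to combine customers with their orders.
joined = conn.execute(
    "SELECT c.name, o.amount FROM orders o "
    "JOIN customers c ON c.id = o.customer_id ORDER BY o.id"
).fetchall()
print(joined)  # [('Alice', 99.5), ('Carol', 20.0)]
```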

Data integration follows a complete process: extracting data from the source application, transforming it, and then loading it into the destination storage.

This process is also called Extract, Transform, and Load (ETL). You can learn more about the ETL process in this blog.
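The three ETL stages can be sketched as plain functions. This is a minimal illustration, not how any particular ETL product works: the source is a hypothetical CSV export held in a string, the transform step trims and type-converts fields, and the destination is an in-memory SQLite table named `sales` (also a hypothetical name).

```python
import csv
import io
import sqlite3

# Hypothetical source export with messy whitespace.
SOURCE_CSV = """region,amount
 north ,120.50
south,80
 north,19.50
"""


def extract(text: str) -> list[dict]:
    """Extract: read raw records from the source."""
    return list(csv.DictReader(io.StringIO(text)))


def transform(records: list[dict]) -> list[tuple[str, float]]:
    """Transform: trim whitespace, normalize case, convert types."""
    return [(r["region"].strip().lower(), float(r["amount"])) for r in records]


def load(rows: list[tuple[str, float]], conn: sqlite3.Connection) -> None:
    """Load: write the cleaned rows into the destination table."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (region TEXT, amount REAL)")
    conn.executemany("INSERT INTO sales VALUES (?, ?)", rows)
    conn.commit()


warehouse = sqlite3.connect(":memory:")  # stands in for a data warehouse
load(transform(extract(SOURCE_CSV)), warehouse)
totals = warehouse.execute(
    "SELECT region, SUM(amount) FROM sales GROUP BY region ORDER BY region"
).fetchall()
print(totals)  # [('north', 140.0), ('south', 80.0)]
```

Without the transform step, " north " and "north" would land in the warehouse as two different regions.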

Data Source

A data source is the primary location where the data is stored. For example, if the data comes from a website, the data source is the application recording that data. It can be MySQL, Google Analytics, Hotjar, HubSpot, or any other application for that purpose. Each application stores the data points in its own repository, and this information can be in PDF, Excel, CSV, Doc, text, or any other format.

If the data comes from a legacy system, the data source may be in a format such as COBOL .idx or IBM DB2 .IXF files.

Data Extraction

This data is extracted from these applications using connectors. Some modern applications can easily export data in JSON or CSV format. However, most legacy applications can only export data with the help of custom connectors, and building compatible connectors can sometimes take days on end.

According to Computer Weekly, almost 90% of organizations still use legacy systems for their data management. That’s why the use of data integration platforms that offer connectors for legacy systems is crucial for them.
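When an application can export JSON or CSV, a simple extractor only has to parse that export into a common record shape. The sketch below assumes two hypothetical exports, a JSON array and a CSV file (held here as strings), and normalizes both into dictionaries with the same keys. Legacy formats would need a custom parser in place of these functions.

```python
import csv
import io
import json

# Hypothetical exports from two different applications.
JSON_EXPORT = '[{"id": 1, "email": "a@example.com"}, {"id": 2, "email": "b@example.com"}]'
CSV_EXPORT = "id,email\n3,c@example.com\n"


def extract_json(text: str) -> list[dict]:
    """Parse a JSON array of objects into records with a fixed shape."""
    return [{"id": int(r["id"]), "email": r["email"]} for r in json.loads(text)]


def extract_csv(text: str) -> list[dict]:
    """Parse CSV rows; every CSV value arrives as a string, so cast the id."""
    return [{"id": int(r["id"]), "email": r["email"]}
            for r in csv.DictReader(io.StringIO(text))]


records = extract_json(JSON_EXPORT) + extract_csv(CSV_EXPORT)
print([r["id"] for r in records])  # [1, 2, 3]
```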

Data Cleansing

The data is then cleansed before being loaded into the destination storage, which in most cases is a data warehouse. Because cleansing data directly in the source system is usually impractical, the process requires a staging area, which ETL software provides. In the staging area, users can apply cleansing operations and transformations such as filters, joins, aggregations, normalization/denormalization, and many more.

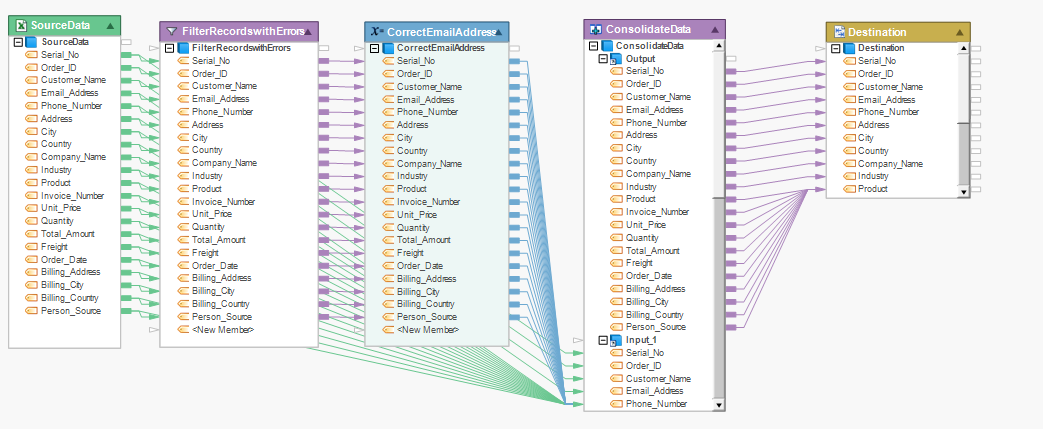

Cleansing data on Astera Centerprise. Source: Astera.com
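Here's a small illustration of the staging-area transformations described above, written in SQL and run through Python's `sqlite3` module, with an in-memory database standing in for the staging area. The table names (`stg_customers`, `stg_orders`) and rows are hypothetical. A single query normalizes inconsistent values, joins the two tables, filters out bad rows, and aggregates before anything reaches the destination.

```python
import sqlite3

staging = sqlite3.connect(":memory:")  # stands in for the ETL staging area
staging.executescript("""
CREATE TABLE stg_customers (id INTEGER, country TEXT);
CREATE TABLE stg_orders (id INTEGER, customer_id INTEGER, amount REAL);
INSERT INTO stg_customers VALUES (1, ' us'), (2, 'US '), (3, 'de');
INSERT INTO stg_orders VALUES
    (10, 1, 50.0),
    (11, 2, 25.0),
    (12, 3, 40.0),
    (13, 3, NULL),   -- incomplete record
    (14, 1, -5.0);   -- invalid negative amount
""")

cleaned = staging.execute("""
    SELECT UPPER(TRIM(c.country)) AS country,      -- normalize values
           SUM(o.amount)          AS revenue       -- aggregate
    FROM stg_orders o
    JOIN stg_customers c ON c.id = o.customer_id   -- join
    WHERE o.amount IS NOT NULL AND o.amount > 0    -- filter bad rows
    GROUP BY UPPER(TRIM(c.country))
    ORDER BY country
""").fetchall()
print(cleaned)  # [('DE', 40.0), ('US', 75.0)]
```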

Data Profiling

Data profiling examines the data to produce summary information about it. This helps identify data quality issues that can surface when the data is loaded into the destination storage.

ETL software keeps a complete data profile and checks for data quality issues when the data is loaded into the destination data warehouse.
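A data profile is essentially a set of summary statistics per column. The sketch below computes a few common ones (row count, missing values, distinct values, minimum, and maximum) for a hypothetical list of records in plain Python. Real profiling tools compute many more, and treating an empty string as missing is an assumption made here.

```python
def profile_column(rows: list[dict], column: str) -> dict:
    """Summarize one column: count, missing, distinct, min, and max."""
    values = [r.get(column) for r in rows]
    # Assumption: None and "" both count as missing values.
    present = [v for v in values if v not in (None, "")]
    return {
        "count": len(values),
        "missing": len(values) - len(present),
        "distinct": len(set(present)),
        "min": min(present) if present else None,
        "max": max(present) if present else None,
    }


# Hypothetical records.
rows = [
    {"id": 1, "age": 34},
    {"id": 2, "age": None},
    {"id": 3, "age": 34},
    {"id": 4, "age": 212},   # suspicious outlier that the profile exposes
]
print(profile_column(rows, "age"))
# {'count': 4, 'missing': 1, 'distinct': 2, 'min': 34, 'max': 212}
```

A maximum age of 212 is exactly the kind of quality issue a profile surfaces before the data reaches the warehouse.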

Data Loading

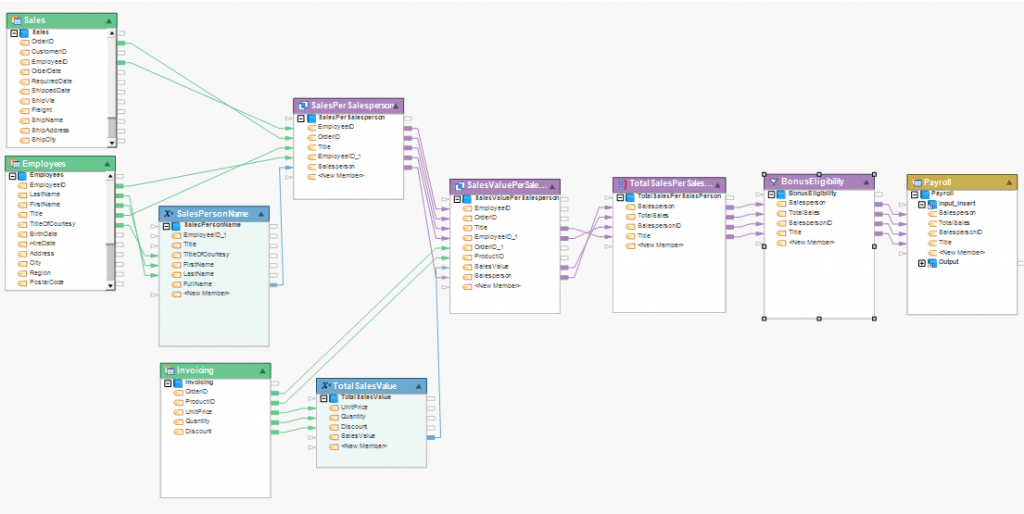

Finally, this cleansed data is loaded into the destination data warehouse. In most modern data integration platforms, users can create data maps. Data mapping provides a blueprint for how data should flow from source to destination. ETL software leverages these mappings to automate data loading without human intervention.
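A data map can be thought of as a declarative lookup from destination fields to source fields, optionally with a conversion per field. The sketch below is a hypothetical, minimal version of that idea; the field names and converter functions are assumptions, and it does not reflect how any specific product stores its mappings.

```python
from typing import Any, Callable

# Hypothetical mapping: destination field -> (source field, converter).
MAPPING: dict[str, tuple[str, Callable[[Any], Any]]] = {
    "customer_id": ("CustID", int),
    "full_name":   ("Name", str.strip),
    "signup_date": ("Created", lambda s: s[:10]),  # keep YYYY-MM-DD only
}


def apply_mapping(source_row: dict, mapping=MAPPING) -> dict:
    """Build a destination row by following the mapping blueprint."""
    return {dest: convert(source_row[src])
            for dest, (src, convert) in mapping.items()}


row = {"CustID": "42", "Name": "  Ada Lovelace ", "Created": "2020-03-01T09:30:00"}
print(apply_mapping(row))
# {'customer_id': 42, 'full_name': 'Ada Lovelace', 'signup_date': '2020-03-01'}
```

Keeping the mapping as data rather than code is what lets a tool apply it automatically to every row.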

With these data integration tools, users can schedule data loading times. Each job runs at its designated time and loads only the new data that was not previously loaded into the destination system, ensuring accuracy, consistency, and completeness.
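One common way to load only new data is a high-water mark: each run looks up the largest key already loaded and copies only rows beyond it. The sketch below assumes the source table has an ever-increasing `id` column and uses in-memory SQLite databases for both ends. The scheduler that would call the hypothetical `run_job` at its designated time is left out.

```python
import sqlite3

source = sqlite3.connect(":memory:")
target = sqlite3.connect(":memory:")
source.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")
target.execute("CREATE TABLE events (id INTEGER PRIMARY KEY, payload TEXT)")


def run_job() -> int:
    """Copy only rows newer than what the target already holds; return count."""
    # High-water mark: the largest id already loaded (0 if the target is empty).
    (watermark,) = target.execute(
        "SELECT COALESCE(MAX(id), 0) FROM events").fetchone()
    new_rows = source.execute(
        "SELECT id, payload FROM events WHERE id > ? ORDER BY id", (watermark,)
    ).fetchall()
    target.executemany("INSERT INTO events VALUES (?, ?)", new_rows)
    target.commit()
    return len(new_rows)


source.executemany("INSERT INTO events VALUES (?, ?)", [(1, "a"), (2, "b")])
print(run_job())  # 2  (first run loads everything)
print(run_job())  # 0  (nothing new, nothing duplicated)
source.execute("INSERT INTO events VALUES (3, 'c')")
print(run_job())  # 1  (only the new row)
```

Deriving the watermark from the target itself means a rerun after a failure does not duplicate rows. Sources without an increasing key typically rely on change timestamps or change data capture instead.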

Data Flows

Creating data mapping in Astera Centerprise. Source: Astera

Dataflows define the path that data takes from the source to the destination. This path is also called the data map. Modern ETL software allows users to create data maps using an intuitive GUI.

Data Connectors

Traditionally, most data migration tasks relied on custom data connectors. Today, ETL software offers pre-built data connectors that users can use to connect multiple databases and applications. For example, if you want to load data from a MySQL database into a Microsoft SQL Server database, you need a medium that converts the data from MySQL's format to SQL Server's. Data connectors let you do that: they connect applications on source systems with applications on destination systems.
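Conceptually, a connector pairs a reader for the source system with a writer for the destination and converts values between their type systems along the way. The sketch below is a hypothetical interface, not the API of any ETL product: both ends are in-memory SQLite databases, and the per-row converter stands in for the type conversion a real cross-database connector would perform.

```python
import sqlite3
from typing import Callable, Iterable, Protocol


class SourceConnector(Protocol):
    def read(self) -> Iterable[tuple]: ...


class DestinationConnector(Protocol):
    def write(self, rows: Iterable[tuple]) -> int: ...


class SQLiteSource:
    """Reads all rows from a table (table name is a trusted constant here)."""
    def __init__(self, conn: sqlite3.Connection, table: str):
        self.conn, self.table = conn, table

    def read(self) -> Iterable[tuple]:
        return self.conn.execute(f"SELECT * FROM {self.table}")


class SQLiteDestination:
    """Writes rows into a table with `width` columns."""
    def __init__(self, conn: sqlite3.Connection, table: str, width: int):
        self.conn, self.table, self.width = conn, table, width

    def write(self, rows: Iterable[tuple]) -> int:
        placeholders = ", ".join("?" * self.width)
        cur = self.conn.executemany(
            f"INSERT INTO {self.table} VALUES ({placeholders})", rows)
        self.conn.commit()
        return cur.rowcount  # executemany sums the rows it inserted


def migrate(src: SourceConnector, dst: DestinationConnector,
            convert: Callable[[tuple], tuple]) -> int:
    """Stream rows from source to destination, converting each one."""
    return dst.write(convert(row) for row in src.read())


# Hypothetical schemas: the source stores flags as 'Y'/'N', the target as 0/1.
src_db, dst_db = sqlite3.connect(":memory:"), sqlite3.connect(":memory:")
src_db.execute("CREATE TABLE users (id INTEGER, active TEXT)")
src_db.executemany("INSERT INTO users VALUES (?, ?)", [(1, "Y"), (2, "N")])
dst_db.execute("CREATE TABLE users (id INTEGER, active INTEGER)")

copied = migrate(SQLiteSource(src_db, "users"),
                 SQLiteDestination(dst_db, "users", width=2),
                 lambda row: (row[0], 1 if row[1] == "Y" else 0))
print(copied, dst_db.execute("SELECT * FROM users").fetchall())
# 2 [(1, 1), (2, 0)]
```

Separating readers, writers, and the conversion step means a new source or destination only needs one new class, which is roughly why pre-built connector libraries scale better than one-off scripts.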

What’s Next In Data Integration Framework?

Once you understand how the whole data integration framework works, the next step is to identify your data integration sources and then use them for the purpose that works for you.

Most companies use data integration to consolidate data on a single platform because it helps them make business projections using OLAP and business intelligence processes.

However, data integration is not limited to business projections. Healthcare companies use data integration to improve their current processes and to develop new vaccines, as they are doing right now with COVID-19 vaccines.

Similarly, the construction industry uses the data integration framework to improve the structural design of buildings through various metrics and data indicators.

Even digital firms such as Netflix, Amazon Prime, and many others use data integration regularly for their search and recommendation engines. In short, the data integration framework powers everything that is linked to data.

But it all starts with the right data integration tool that you can use to consolidate, cleanse, and prepare data for the project that you have in mind.

Digital Vidya has created an insightful analysis of how the data integration framework can help companies analyze data. Here you can see how it is being used in various industries around the world.

Source: Digital Vidya

Bottom Line

Astera Centerprise is data integration software that offers over 40 data connectors for migrating data from both legacy and modern systems. Astera Centerprise users can use these connectors for all their data integration and migration needs, integrating data on-premises to on-premises, on-premises to cloud, and cloud to cloud in a code-free environment.