Did you know that data-driven decision-making can help companies increase their productivity by almost 6%? Yet barely 0.5% of the world’s data is ever analyzed and used. This means businesses all over the world are missing out on huge opportunities simply because they cannot access the right data at the right time.

This is where data extraction can help you. It allows you to extract meaningful information hidden within unstructured data sources such as IoT data, web data, and social media data.

Each of these sources has some internal structure, but none of them fits neatly into a relational database without some data massaging.

Here’s an example to help you better understand.

Suppose your company is experiencing a decline in revenue because of employee turnover. You maintain a spreadsheet that shows the list of all employees and employee attrition status for every month.

To analyze the trend in the attrition rate, you may want to extract the rows with attrition status and aggregate them. This will help you see how well you are retaining your employees and develop the strategies needed (such as improving your employee benefits program) to reduce attrition.
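The extract-and-aggregate step described above can be sketched in a few lines of Python. This is only an illustration: the column names (`employee_id`, `month`, `attrition`) and the sample rows are assumptions made for the example, not the layout of any real spreadsheet.

```python
import csv
import io
from collections import Counter

# Hypothetical spreadsheet export: one row per employee per month.
SAMPLE_CSV = """employee_id,month,attrition
101,2023-01,No
102,2023-01,Yes
103,2023-01,No
101,2023-02,No
103,2023-02,Yes
104,2023-02,Yes
"""


def attrition_by_month(csv_text):
    """Extract the rows flagged as attrition and count them per month."""
    reader = csv.DictReader(io.StringIO(csv_text))
    counts = Counter()
    for row in reader:
        # Keep only the rows whose attrition status is "Yes".
        if row["attrition"].strip().lower() == "yes":
            counts[row["month"]] += 1
    return dict(counts)


monthly_attrition = attrition_by_month(SAMPLE_CSV)
print(monthly_attrition)
```

Comparing the monthly counts side by side is what reveals the trend in the attrition rate.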

But what exactly is data extraction? And what are some of the best data extraction tools out there?

You’ll find out in this article.

What is Data Extraction?

Data extraction is the process of retrieving data from numerous source systems.

Often, businesses extract data in order to process it further or to transfer it to a repository (like a data warehouse or a data lake) for storage.

During this migration, it is quite common to transform the data as well.

For instance, you may carry out calculations on the data (such as aggregating sales data) and store the result in your repository.

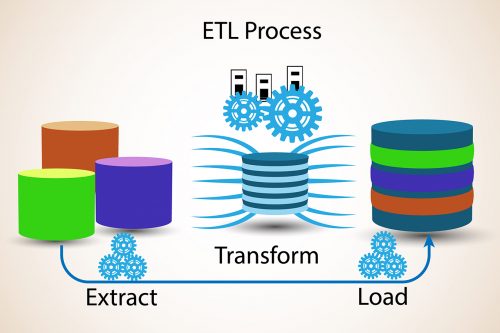

Collectively, this process is called Extraction, Transformation, and Loading (ETL), and extraction is its first key step.

Data extraction helps identify which data is most valuable for attaining the business goal, driving the overall ETL data integration process.
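To make the three ETL steps concrete, here is a minimal sketch that uses two in-memory SQLite databases as stand-ins for a source system and a repository. The table names (`sales`, `sales_by_region`), the columns, and the sample figures are all hypothetical.

```python
import sqlite3


def run_etl(source, target):
    """Extract raw sales rows, aggregate them per region, load the totals."""
    # Extract: read the raw rows from the source system.
    rows = source.execute("SELECT region, amount FROM sales").fetchall()

    # Transform: aggregate the sales amounts per region.
    totals = {}
    for region, amount in rows:
        totals[region] = totals.get(region, 0) + amount

    # Load: store the aggregated result in the target repository.
    target.execute(
        "CREATE TABLE IF NOT EXISTS sales_by_region "
        "(region TEXT PRIMARY KEY, total REAL)"
    )
    target.executemany(
        "INSERT OR REPLACE INTO sales_by_region VALUES (?, ?)",
        sorted(totals.items()),
    )
    target.commit()
    return totals


# Hypothetical source system with a handful of sales rows.
source = sqlite3.connect(":memory:")
source.execute("CREATE TABLE sales (region TEXT, amount REAL)")
source.executemany(
    "INSERT INTO sales VALUES (?, ?)",
    [("north", 100.0), ("south", 50.0), ("north", 25.0)],
)

target = sqlite3.connect(":memory:")
etl_totals = run_etl(source, target)
loaded = target.execute(
    "SELECT region, total FROM sales_by_region ORDER BY region"
).fetchall()
print(loaded)
```

In a real pipeline each step would typically talk to a different system, but the order of the steps stays the same.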

Data Extraction Challenges

Although data extraction offers a number of benefits to enterprises, it has its own set of challenges.

Some of these challenges are:

- Unstructured/Semi-structured Data

The first challenge is extracting unstructured or semi-structured data, which lacks a consistent structure. Such data needs to be reviewed or formatted before any extraction can occur.

For instance, you may want to extract data from notes handwritten by your sales representatives about the prospects they have spoken with. Each representative may have taken notes in a different style, so the notes would need to be reviewed before being run through a data extraction tool.

- Compatibility Issues

Another challenge is bringing together data that is not compatible. For example, one source may hold structured data (such as phone numbers or ZIP codes) while another holds unstructured data (such as text files or email messages).

If you are extracting data in order to analyze it further, you are likely to run an ETL process so that the data from both sources becomes compatible and can be analyzed together in a single repository.

- Quality and Security of Data

Maintaining data quality and security is another challenge that you might face during the extraction process.

With raw data coming in from multiple sources, data quality issues such as dummy values, redundancy, or conflicting data are common. Thus, you have to clean and transform this data after extraction to make it usable.

Often your data might include sensitive information. For example, it may contain personally identifiable information or any other info that’s highly regulated.

In this case, the extraction process would involve eliminating sensitive information. Data security can also be a major stumbling block during transfers. For instance, you may have to encrypt the data in transit as a safety measure.
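As a rough illustration of the cleanup described above, the sketch below drops dummy values, removes redundant records, and masks email addresses as one example of sensitive information. The record layout, the list of dummy values, and the masking rule are assumptions made for this example; encryption in transit is a separate concern and is not shown.

```python
import re

# Assumed placeholder values that mark a record as a dummy entry.
DUMMY_VALUES = {"", "n/a", "test", "xxx"}
# Deliberately simple pattern; real email validation is more involved.
EMAIL_PATTERN = re.compile(r"[^@\s]+@[^@\s]+\.[^@\s]+")


def clean_records(records):
    """Drop dummy and duplicate records, then mask email addresses."""
    seen = set()
    cleaned = []
    for record in records:
        name = record["name"].strip()
        if name.lower() in DUMMY_VALUES:
            continue  # dummy value: skip the record
        key = (name.lower(), record["email"].lower())
        if key in seen:
            continue  # redundant record: already kept once
        seen.add(key)
        masked_email = EMAIL_PATTERN.sub("[redacted]", record["email"])
        cleaned.append({"name": name, "email": masked_email})
    return cleaned


raw_records = [
    {"name": "Ada", "email": "ada@example.com"},
    {"name": "ada ", "email": "ADA@example.com"},  # duplicate of the first
    {"name": "test", "email": "t@example.com"},    # dummy entry
]
cleaned_records = clean_records(raw_records)
print(cleaned_records)
```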

Data Extraction Techniques

The data extraction method you choose depends strongly on the source system as well as your business requirements in the target data warehouse environment.

The following are the two types of data extraction techniques:

- Full Extraction

In this technique, the data is extracted in full from the source. This means you don’t have to keep track of changes to the data source since the last successful extraction, because every run retrieves the complete data set and leaves nothing behind.

The source data is provided in its existing condition and no extra logical information (such as timestamps) needs to be applied to provide context for inputs.

Examples of full extraction include an export file of a single table or a remote SQL statement that scans the entire source table.
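A SQL statement that scans the whole table might look like the following sketch, which uses an in-memory SQLite database as a stand-in for the source system. The `products` table and its rows are hypothetical.

```python
import sqlite3


def full_extract(conn, table):
    """Return every row of `table` as a list of dictionaries."""
    # The table name is interpolated into the SQL text, so only accept
    # plain identifiers to avoid running arbitrary SQL.
    if not table.isidentifier():
        raise ValueError(f"unexpected table name: {table!r}")
    cursor = conn.execute(f"SELECT * FROM {table}")
    columns = [description[0] for description in cursor.description]
    return [dict(zip(columns, row)) for row in cursor.fetchall()]


# Hypothetical source table.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE products (id INTEGER, name TEXT)")
conn.executemany(
    "INSERT INTO products VALUES (?, ?)", [(1, "widget"), (2, "gadget")]
)

all_rows = full_extract(conn, "products")
print(all_rows)
```

Note that the function takes no "since" argument: a full extraction reads everything on every run.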

- Incremental Extraction

In this technique, only the data that has been altered since a distinct past event is extracted. This past event could be the last successful extraction or a more intricate business event such as the last booking day of a financial period.

However, incremental extraction comes with one big challenge: there must be some way to identify all the data that has been altered since that past event, that is, the delta.

The source data itself can provide this information (such as an application column that reflects the last-altered timestamp).

Alternatively, a change table can provide this information, using an additional mechanism that records the changes alongside the originating transactions.

These additional mechanisms are discussed in the next section.
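As a sketch of the first option, where the source data itself carries a last-altered timestamp, the code below extracts only the rows changed since the previous run and returns a new "watermark" for the next run. The `customers` table, its `last_update` column, and the ISO 8601 text timestamps are assumptions made for this example.

```python
import sqlite3


def incremental_extract(conn, last_extracted_at):
    """Return rows changed after `last_extracted_at` and the new watermark."""
    rows = conn.execute(
        "SELECT id, name, last_update FROM customers "
        "WHERE last_update > ? ORDER BY last_update",
        (last_extracted_at,),
    ).fetchall()
    # ISO 8601 timestamps sort correctly as plain text, so the last row
    # holds the most recent change; keep the old watermark if nothing changed.
    new_watermark = rows[-1][2] if rows else last_extracted_at
    return rows, new_watermark


# Hypothetical source table with a last-altered timestamp per row.
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE customers (id INTEGER, name TEXT, last_update TEXT)")
conn.executemany(
    "INSERT INTO customers VALUES (?, ?, ?)",
    [
        (1, "Al", "2024-01-01T00:00:00"),
        (2, "Bo", "2024-01-05T12:00:00"),
        (3, "Cy", "2024-01-09T08:30:00"),
    ],
)

# The previous successful extraction finished on 3 January.
changed, watermark = incremental_extract(conn, "2024-01-03T00:00:00")
# Running again with the new watermark finds nothing further to extract.
again, watermark_again = incremental_extract(conn, watermark)
print(changed, watermark, again)
```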

Data Capture Methods

To efficiently identify and extract only the most recently changed data, the following three data capture methods are used:

- Timestamps

In some operational source systems, the tables have timestamp columns that specify the time and date at which a particular row was last altered. As a result, it becomes easy to identify the latest data using these timestamp columns.

For example, column names such as LAST_UPDATE are commonly used.

However, timestamp information is not always available in an operational system, and you will not always be able to alter the system to include it.

Doing so would first require adding a new timestamp column to the source system’s tables, and then creating a trigger that updates that column after every operation that changes a given row.

- Partitioning

The second data capture technique is partitioning, in which the source tables are partitioned along a date key. This date key makes new data easy to identify.

For instance, if you are extracting data from a sales table that’s partitioned by month, you can easily identify the current month’s data.
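The idea can be sketched without a real database by modelling each monthly partition as an entry in a dictionary keyed by `YYYY-MM`. This structure is purely a stand-in for a partitioned sales table, and the order IDs and amounts are made up.

```python
from datetime import date


def partition_key(day):
    """Map a calendar date to its monthly partition key, e.g. '2024-03'."""
    return day.strftime("%Y-%m")


def extract_partition(partitions, day):
    """Return only the rows stored in the partition that contains `day`."""
    return list(partitions.get(partition_key(day), []))


# Hypothetical sales table, partitioned by month.
sales_partitions = {
    "2024-02": [("order-1", 120.0), ("order-2", 80.0)],
    "2024-03": [("order-3", 45.5)],
}

current_month_rows = extract_partition(sales_partitions, date(2024, 3, 15))
print(current_month_rows)
```

Because the extraction touches a single partition, the older months never have to be scanned.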

- Triggers

The third method to capture data is via triggers, which can be created in source systems to keep track of newly updated records.

You can even use triggers together with timestamp columns to ascertain the exact time and date when a given row was last altered.

All you have to do is create a trigger on each source table that needs change data capture. After each Data Manipulation Language (DML) statement performed on the source table, the trigger updates the timestamp column with the current time. Consequently, the timestamp column records the exact time and date when a given row was last altered.
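Here is a minimal sketch of such a trigger, using SQLite through the Python standard library. The `orders` table, the `last_update` column, and the trigger name are hypothetical, and trigger syntax differs between database systems. This version fires on updates only; inserts are covered by the column default instead.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript(
    """
    CREATE TABLE orders (
        id INTEGER PRIMARY KEY,
        status TEXT NOT NULL,
        -- New rows get the current time automatically.
        last_update TEXT NOT NULL DEFAULT CURRENT_TIMESTAMP
    );

    -- After every UPDATE on a row, stamp that row with the current time.
    -- SQLite does not re-fire triggers recursively by default, so the
    -- UPDATE inside the trigger body does not trigger itself again.
    CREATE TRIGGER orders_touch_last_update
    AFTER UPDATE ON orders
    FOR EACH ROW
    BEGIN
        UPDATE orders SET last_update = CURRENT_TIMESTAMP WHERE id = NEW.id;
    END;
    """
)

# Insert a row with a deliberately old timestamp, then change it.
conn.execute(
    "INSERT INTO orders (id, status, last_update) "
    "VALUES (1, 'new', '2000-01-01 00:00:00')"
)
conn.execute("UPDATE orders SET status = 'shipped' WHERE id = 1")

status, last_update = conn.execute(
    "SELECT status, last_update FROM orders WHERE id = 1"
).fetchone()
print(status, last_update)
```

After the update, `last_update` no longer holds the old value, so an incremental extraction that filters on this column will pick the row up.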

Data Extraction Tools

Looking for the best data extraction tool for your business? We’ve compiled the top 3 tools to help you get started.

- Astera Centerprise

Astera Centerprise allows you to accomplish in minutes what data extraction teams do in hours or days. It’s powerful end-to-end data integration software that allows you to integrate, clean, and transform data, even if you don’t know how to code.

It automates your routine data integration, cleaning, and transformation via built-in workflow automation, time and event-based triggers, and job scheduling. Additionally, the user-friendly interface streamlines data extraction, allowing business users to build extraction logic in a completely code-free manner.

- Stitch

Stitch is a cloud-first, developer-focused tool for quickly transferring data. Its Data Loader provides a fast, fault-tolerant path to data extraction from more than 90 sources. It offers advanced ETL features and support to reduce the cost and complexity of your data flows.

Whether you’re supporting multiple environments, performing data migration, or maintaining a hybrid data stack, Stitch can help you route your data sources to different destinations to meet your needs.

- ETL Works

ETLWorks allows you to seamlessly extract and load data from and to all your sources and targets, regardless of the format, volume, and location. You can parse and create nested XML and JSON documents.

Plus, you can connect it to all your cloud and on-premise data sources, whether they are behind a firewall, semi-structured, or unstructured.

Conclusion

Every single day, a significant volume of data is exchanged by enterprises. This makes manual data extraction a challenging task.

Using a data extraction tool like Astera Centerprise can automate the extraction process and make crucial business data available in a timely manner.

As a result, you are able to make better decisions and streamline your business operations.

You can sign up for a free 14-day trial of Astera Centerprise right here.