Business decisions are based on intelligence. And intelligence comes from data: the right kind of data, in sufficient amounts, fed into your systems so you can gain meaningful insights and make profitable decisions for your business.

But to make data-driven decisions, businesses need to obtain vast volumes of data from a variety of sources. That’s where the data ingestion process comes in.

What is Data Ingestion?

Here is a paraphrased version of how TechTarget defines it: data ingestion is the process of importing data from multiple sources into a single storage medium that businesses can use to generate meaningful insights and make intelligent decisions.

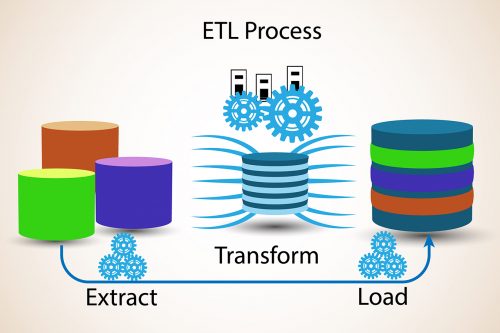

Technically, data ingestion can mean transferring data from any single source. In practice, though, businesses have multiple units, each with its own applications, file types, and systems. To make business decisions, companies import data from these heterogeneous sources into a single storage medium, typically a data warehouse or a data mart, after passing it through the Extract, Transform, Load (ETL) process.

For example, they can transfer data from multiple isolated databases, spreadsheets, delimited files, and PDFs. Later, this information can be cleansed, organized, and run through OLAP for business forecasting.
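The extract, transform, load flow described above can be sketched in a few lines of Python. This is a minimal illustration rather than a production pipeline: the two sources (a CRM export in CSV and a support system in JSON), their field names, and the `contacts` table are hypothetical, and an in-memory SQLite database stands in for the data warehouse.

```python
import csv
import io
import json
import sqlite3

# Hypothetical sample data standing in for two isolated source systems.
CRM_CSV = """email,full_name,signup_date
ada@example.com,Ada Lovelace,2023-01-05
grace@example.com , Grace Hopper,2023-02-11
"""

SUPPORT_JSON = '[{"Email": "ALAN@example.com", "Name": "Alan Turing", "Date": "2023-03-20"}]'


def extract():
    """Extract: read raw rows from each source in its native format."""
    crm_rows = list(csv.DictReader(io.StringIO(CRM_CSV)))
    support_rows = json.loads(SUPPORT_JSON)
    return crm_rows, support_rows


def transform(crm_rows, support_rows):
    """Transform: map both sources onto one schema and clean the values."""
    unified = []
    for row in crm_rows:
        unified.append(
            (row["email"].strip().lower(), row["full_name"].strip(), row["signup_date"], "crm")
        )
    for row in support_rows:
        unified.append(
            (row["Email"].strip().lower(), row["Name"].strip(), row["Date"], "support")
        )
    return unified


def load(rows, conn):
    """Load: write the unified rows into a warehouse table."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS contacts (email TEXT, name TEXT, first_seen TEXT, source TEXT)"
    )
    conn.executemany("INSERT INTO contacts VALUES (?, ?, ?, ?)", rows)
    conn.commit()


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")  # stand-in for a real data warehouse
    load(transform(*extract()), warehouse)
    for record in warehouse.execute("SELECT * FROM contacts ORDER BY email"):
        print(record)
```

Because each stage is a separate function, a real source (a database cursor or a file on disk) could replace either sample without changing the load step.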

Where Does this Massive Amount of Data Come From?

An average organization gets data from multiple sources. For starters, it gets leads from websites, mobile apps, and third-party lead generators. This data is available in the CRM and is usually held by the marketing department. Then, the organization has a list of converted customers, usually held by the sales department. Similarly, the queries and chat logs of customers and visitors are held by the customer support department. The quality assurance department keeps a list of customers who have found a bug or have asked for a customized product. The business development team has a list of its own that usually consists of potential customers who have been shown the product demo and are in the conversion funnel.

Combined, all this data can easily add up to more than a million data points that need to be turned into comprehensible insights senior management can use for future decisions.

Moreover, this example covers only the internal data of a single organization. What if the organization acquires a startup? The data volume can double, or even quadruple if there is more than one acquisition. A single merger can add more than a million data points to the system. Many enterprises also have multiple subsidiaries operating under them. All this data becomes too enormous to handle, let alone mine for useful insights, unless it is ingested into a data warehouse in a refined format.

Data Ingestion Sources

Data can be ingested from internal sources (business units) or external sources (other organizations) and combined in a data warehouse. The sources can include:

- Databases

- XML sources

- Spreadsheets

- COBOL, Easytrieve, PL/SQL, Transact-SQL, and RPG sources

- Flat files

- ERP data from SAP, Oracle, OpenERP, and other sources

- Weblogs and cookies
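Each source type needs its own reader before the data can be combined. As a hedged sketch, the Python below normalizes two of the formats listed above, an XML export and a fixed-width flat file, into the same dictionary shape. The sample records, the element names, and the column widths of the flat file are assumptions made for illustration.

```python
import xml.etree.ElementTree as ET

# Hypothetical XML export from an ERP system.
ERP_XML = """<orders>
  <order id="1001"><customer>ACME</customer><total>250.00</total></order>
  <order id="1002"><customer>Globex</customer><total>99.50</total></order>
</orders>"""

# Hypothetical fixed-width flat file: id (4 chars), customer (9 chars), total (rest).
LEGACY_FLAT = """1003Initech  410.25
1004Umbrella 12.00
"""


def read_xml(text):
    """Turn each <order> element into a dict with typed values."""
    root = ET.fromstring(text)
    return [
        {
            "id": int(order.get("id")),
            "customer": order.findtext("customer"),
            "total": float(order.findtext("total")),
        }
        for order in root.iter("order")
    ]


def read_fixed_width(text):
    """Slice each line of the flat file at the assumed column boundaries."""
    records = []
    for line in text.splitlines():
        if not line.strip():
            continue  # skip blank lines
        records.append(
            {"id": int(line[0:4]), "customer": line[4:13].strip(), "total": float(line[13:])}
        )
    return records


if __name__ == "__main__":
    # Both readers emit the same shape, so the results can simply be concatenated.
    for record in read_xml(ERP_XML) + read_fixed_width(LEGACY_FLAT):
        print(record)
```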

Data Ingestion Destination

Similarly, the destination of a data ingestion process can be a data warehouse, a data mart, a database silo, or a document storage medium. In short, the destination is where your ingested data lands after it has been transferred from the various sources.

Data Ingestion Process

Data ingestion refers to moving data from one point to another (for example, from a main database to a data lake) for some purpose. It does not necessarily involve any transformation or manipulation of the data along the way; it can be as simple as extracting data from one point and loading it into another.
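In its simplest form, then, ingestion is just extract and load. The sketch below copies a table from one SQLite database to another without touching the values. The two in-memory databases stand in for a main database and a data lake, and the `copy_table` helper and `events` table are hypothetical.

```python
import sqlite3


def copy_table(source, destination, table):
    """Copy every row of `table` from one SQLite database to another, unchanged.

    The table name is interpolated into SQL, so it is assumed to come from
    trusted configuration rather than user input.
    """
    # Reuse the source table's own CREATE statement so the schema matches exactly.
    row = source.execute(
        "SELECT sql FROM sqlite_master WHERE type = 'table' AND name = ?", (table,)
    ).fetchone()
    if row is None:
        raise ValueError(f"table {table!r} not found in source database")
    destination.execute(row[0])

    rows = source.execute(f"SELECT * FROM {table}").fetchall()
    if rows:
        placeholders = ", ".join("?" * len(rows[0]))
        destination.executemany(f"INSERT INTO {table} VALUES ({placeholders})", rows)
    destination.commit()
    return len(rows)


if __name__ == "__main__":
    main_db = sqlite3.connect(":memory:")  # stand-in for the main database
    lake = sqlite3.connect(":memory:")     # stand-in for the data lake
    main_db.execute("CREATE TABLE events (id INTEGER, kind TEXT)")
    main_db.executemany("INSERT INTO events VALUES (?, ?)", [(1, "click"), (2, "view")])
    print(copy_table(main_db, lake, "events"), "rows copied")
```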

Each organization has its own data ingestion framework, depending on its objectives.

Data Ingestion Approaches

There are three main approaches to data ingestion: batch, real-time, and streaming. A fourth, the lambda approach, combines batch and streaming. Let’s learn about each in detail.

- Batch Data Processing

In batch data processing, data is ingested in batches. Let’s say the organization wants to import data from various sources into the warehouse every Monday morning. Batch processing is the natural fit here, because it is an efficient way to process large amounts of data at scheduled intervals.
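A weekly batch job typically collects everything that accumulated since the last run and loads it in fixed-size chunks. The sketch below assumes a hypothetical `sales` table and a default batch size of 1,000 rows. Triggering the job every Monday morning would be the job of an external scheduler such as cron, which is outside the scope of this sketch.

```python
import sqlite3
from itertools import islice


def batched(iterable, size):
    """Yield lists of at most `size` items from `iterable`."""
    iterator = iter(iterable)
    while batch := list(islice(iterator, size)):
        yield batch


def load_in_batches(records, conn, batch_size=1000):
    """Insert records one batch (one transaction) at a time; return the number of batches."""
    conn.execute("CREATE TABLE IF NOT EXISTS sales (order_id INTEGER, amount REAL)")
    batches = 0
    for batch in batched(records, batch_size):
        with conn:  # commits this batch, or rolls it back if an insert fails
            conn.executemany("INSERT INTO sales VALUES (?, ?)", batch)
        batches += 1
    return batches


if __name__ == "__main__":
    warehouse = sqlite3.connect(":memory:")
    week_of_sales = [(i, i * 1.5) for i in range(2500)]  # hypothetical accumulated records
    print(load_in_batches(week_of_sales, warehouse), "batches loaded")
```

Committing per batch keeps each transaction small, so a failure partway through only rolls back the batch in progress rather than the whole week of data.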

- Real-time Data Processing

In real-time data processing, data is processed in smaller chunks as soon as it arrives.

Real-time processing is typically chosen by organizations that depend on up-to-the-moment data, such as national energy grids, banks, and financial companies.
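Real-time ingestion handles each record the moment it arrives instead of waiting for a scheduled run. The sketch below uses Python's standard `queue.Queue` and a worker thread as a stand-in for a live feed of bank transactions; the transaction format and the 10,000 alert threshold are hypothetical.

```python
import queue
import threading

STOP = object()  # sentinel that tells the consumer to shut down


def consume(events, alerts, limit=10_000):
    """Handle each transaction as soon as it arrives instead of waiting for a batch."""
    while True:
        txn = events.get()  # blocks until the next event is available
        if txn is STOP:
            break
        if txn["amount"] > limit:  # hypothetical business rule
            alerts.append(txn["id"])


if __name__ == "__main__":
    events = queue.Queue()
    alerts = []
    worker = threading.Thread(target=consume, args=(events, alerts))
    worker.start()
    for txn in [{"id": "t1", "amount": 120}, {"id": "t2", "amount": 25_000}]:
        events.put(txn)  # in a real system these would arrive from a network source
    events.put(STOP)
    worker.join()
    print(alerts)  # ['t2']
```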

- Streaming Data Processing

Unlike real-time processing, which handles data in discrete chunks, streaming is a continuous process: data flows in without end and is processed as it moves. This type of data ingestion is mostly used for forex and stock market valuations, predictive analytics, and online recommender systems.
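Because a stream never ends, streaming computations usually work over a window of recent values rather than a complete dataset. As an illustrative sketch, the generator below maintains a rolling average over a price feed of unknown length; the window size and the sample feed are assumptions.

```python
import itertools
from collections import deque
from typing import Iterable, Iterator


def moving_average(prices: Iterable[float], window: int) -> Iterator[float]:
    """Emit the average of the last `window` prices after every new tick.

    Works on unbounded iterables because it only keeps `window` values in memory.
    """
    recent = deque(maxlen=window)
    total = 0.0
    for price in prices:
        if len(recent) == window:
            total -= recent[0]  # this value is evicted by the append below
        recent.append(price)
        total += price
        yield total / len(recent)


if __name__ == "__main__":
    ticks = itertools.cycle([1.10, 1.12, 1.08])  # hypothetical endless EUR/USD feed
    for avg in itertools.islice(moving_average(ticks, window=3), 5):
        print(round(avg, 4))
```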

- Lambda Approach

Next, we have the lambda approach. It combines both processes, batch and streaming, to balance latency and throughput. The use of lambda architecture is on the rise due to the growth of big data and the need for real-time analytics.
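The core idea of the lambda approach can be illustrated with a toy example. In the sketch below, a batch layer periodically recomputes exact page-view counts from the full event log, a speed layer counts only events that arrived since the last batch run, and queries merge the two views. The `LambdaCounter` class is hypothetical; production systems would run the two layers on separate batch and stream processing engines.

```python
from collections import Counter


class LambdaCounter:
    """Toy lambda architecture for counting page views per page.

    - batch layer: recomputes exact counts from the full master dataset
    - speed layer: incrementally counts events since the last batch run
    - serving layer: answers queries by merging the two views
    """

    def __init__(self):
        self.master = []            # append-only log of every event ever ingested
        self.batch_view = Counter()
        self.speed_view = Counter()

    def ingest(self, page):
        self.master.append(page)    # input for the next batch recomputation
        self.speed_view[page] += 1  # low-latency incremental update

    def run_batch(self):
        # Recompute from scratch (high throughput, high latency). This toy version
        # runs synchronously, so every event is now covered by the batch view and
        # the speed view can be reset.
        self.batch_view = Counter(self.master)
        self.speed_view.clear()

    def query(self, page):
        return self.batch_view[page] + self.speed_view[page]


if __name__ == "__main__":
    views = LambdaCounter()
    for page in ["home", "home", "pricing"]:
        views.ingest(page)
    views.run_batch()
    views.ingest("home")
    print(views.query("home"))  # 3
```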

Challenges Companies Face While Ingesting Data

Now that you know the approaches for ingesting data into a medium, here is a list of problems that companies often face while ingesting data, and how a data ingestion tool can help solve each one.

- Maintaining Data Quality

The biggest challenge of ingesting data from any source is maintaining data quality and completeness, which is critical for the business intelligence operations you will perform on your data. However, because ingested data is often not used for BI right away, data quality issues can go undiscovered. You can minimize this risk by using a data ingestion tool that provides built-in data quality features.
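Catching quality problems at ingestion time, before the data reaches BI reports, is usually cheaper than discovering them later. The sketch below shows the kind of rule-based check a data quality feature might run; the required fields and the individual rules are hypothetical examples.

```python
from datetime import date

REQUIRED = ("customer_id", "email", "order_date")  # hypothetical schema


def validate(record):
    """Return a list of problems found in one record (an empty list means it passed)."""
    problems = [f"missing {field}" for field in REQUIRED if record.get(field) in (None, "")]
    email = record.get("email")
    if email and "@" not in email:
        problems.append("malformed email")
    order_date = record.get("order_date")
    if order_date:
        try:
            date.fromisoformat(order_date)  # rejects impossible dates such as 2023-02-30
        except ValueError:
            problems.append("invalid order_date")
    return problems


def split_by_quality(records):
    """Separate clean records from rejects so bad data is caught at ingestion time."""
    clean, rejected = [], []
    for record in records:
        problems = validate(record)
        if problems:
            rejected.append((record, problems))
        else:
            clean.append(record)
    return clean, rejected
```

Keeping the rejects together with their reasons, instead of silently dropping them, gives someone a concrete list to fix at the source.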

- Syncing data from multiple sources

Data exists in multiple formats across an organization. As the organization grows, more data piles up, and it soon becomes hard to manage. Syncing all this data, or ingesting it into a single warehouse, is the solution. But since the data comes from multiple sources, extracting it can be a problem. Data ingestion tools that offer multiple interfaces to extract, transform, and load the data can solve this.
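When the same entity appears in several systems, syncing means deciding which version of each field to keep. A minimal sketch, assuming each record carries a shared `email` key and an `updated_at` timestamp (both hypothetical), merges records from any number of sources so that the most recent update wins.

```python
def merge_sources(*sources):
    """Combine customer records from several systems into one record per customer.

    Assumes every record has a shared "email" key and an "updated_at" timestamp in
    one ISO-8601 format, so plain string comparison sorts chronologically. When
    systems disagree on a field, the most recent update wins; fields that only
    older records have are kept.
    """
    merged = {}
    all_records = [record for source in sources for record in source]
    for record in sorted(all_records, key=lambda r: r["updated_at"]):
        key = record["email"].strip().lower()  # normalize the join key
        merged.setdefault(key, {}).update(record)
        merged[key]["email"] = key
    return merged


if __name__ == "__main__":
    crm = [{"email": "Ada@Example.com", "phone": "111", "updated_at": "2023-01-01T00:00:00"}]
    support = [{"email": "ada@example.com", "phone": "222", "updated_at": "2023-06-01T00:00:00"}]
    print(merge_sources(crm, support))
```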

- Creating a Uniform Structure

To make business intelligence functions work properly, you will need to create a uniform structure by using data mapping features that can organize the data points. A data ingestion tool can cleanse, transform, and map the data to the right destination.
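Data mapping boils down to translating each source's field names into one target schema. The dictionary-driven sketch below illustrates the idea; the source names, field names, and target schema are assumptions made for the example.

```python
# Hypothetical mapping from each source's field names to the target warehouse schema.
FIELD_MAPS = {
    "crm": {"Email Address": "email", "Full Name": "name", "Phone": "phone"},
    "support": {"user_email": "email", "display_name": "name"},
}

TARGET_FIELDS = ("email", "name", "phone", "source")


def map_record(record, source):
    """Rename fields to the target schema, drop unmapped ones, and fill gaps with None."""
    mapping = FIELD_MAPS[source]
    mapped = {target: record[src] for src, target in mapping.items() if src in record}
    mapped["source"] = source  # keep lineage so each row can be traced to its system
    return {field: mapped.get(field) for field in TARGET_FIELDS}


if __name__ == "__main__":
    print(map_record({"user_email": "b@example.com", "display_name": "B"}, "support"))
```

Keeping the mappings in data rather than in code means a new source can be onboarded by adding one dictionary entry.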

Selecting the Right Data Ingestion Tool For Business

Now that you are aware of the common data ingestion challenges, let’s look at some tools that can help address them.

- Astera Centerprise

Astera Centerprise is a visual data management and integration tool for building bi-directional integrations, complex data mappings, and data validation tasks to streamline data ingestion. It offers a high-throughput, low-latency platform for handling batch and near real-time data feeds. It also allows swift data transfer to Salesforce, MS Dynamics, SAP, Power BI, and other visualization and OLAP software. Moreover, through its workflow automation capability, users can easily automate scheduling, syncing, and deployment tasks. It is used by enterprises and banks, including the financial giant Wells Fargo.

- Apache Kafka

Apache Kafka is an open-source, fault-tolerant, distributed data streaming platform that offers high-throughput messaging between systems. It helps connect multiple data sources to one or more destinations in an organized format. It is already in use by tech giants such as Netflix, Walmart, and others.

- Fluentd

Fluentd is another open-source data ingestion platform that lets you unify data collection and deliver the data to destinations such as a data warehouse. It supports tasks such as filtering, merging, buffering, and logging data, and it structures data as JSON as it moves between multiple sources and destinations. However, its drawback is that it doesn’t support workflow automation, which limits the software to narrower use cases.

- Wavefront

Wavefront is a paid platform for data management tasks such as ingesting, storing, visualizing, and transforming data. It processes streaming data for high-dimensional data analysis and can ingest millions of data points every second. It offers features such as big data intelligence, enterprise application integration, data quality, and master data management. Data ingestion is just one of the many data management features Wavefront has to offer.

Conclusion

In essence, data ingestion is essential for intelligent data management and for gathering business insights. It allows medium and large enterprises to maintain a federated data warehouse by ingesting data in real time, and to make well-informed decisions through ad hoc data delivery.